Generative AI technology is changing the delivery of global events and streaming multimedia content for the better, making it possible to reach more people more easily and more affordably than ever. Whether your target audience’s first language is Italian, Japanese, or American Sign Language (ASL), you can communicate in real time via interpretation and captioning technology.

Lionbridge webinar attendees got to experience how the technology works first-hand as Lionbridge’s moderator, Will Rowlands-Rees, led a lively discussion on generative AI for global events with panelists representing Dell, VMware, Zendesk, and CSA Research.

If you missed the session, you can watch it on demand. The webinar was the third in a series on generative AI and language services. To view the recordings of other webinars in this series, visit the Lionbridge webinars page.

Short on time? Read on for some highlights.

What Was Once Fantasy Is Now Reality

It’s challenging to engage a wide audience and reach accessibility goals for webinars, meetings, and training. It’s even more difficult when your target audience speaks many languages.

However, rapid advances in AI tools are bringing these goals within reach.

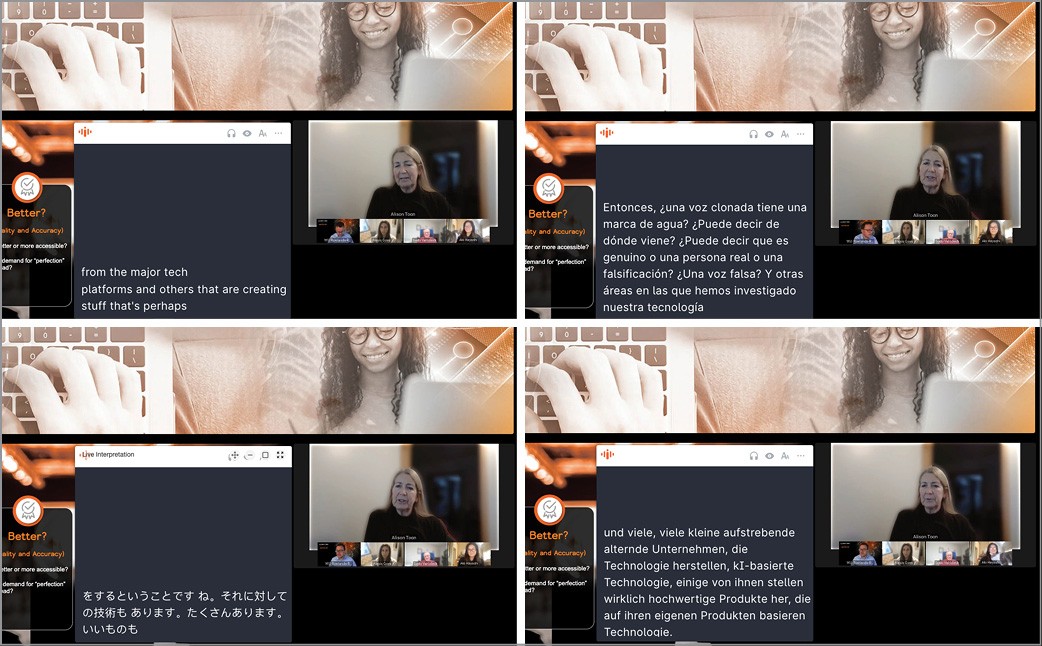

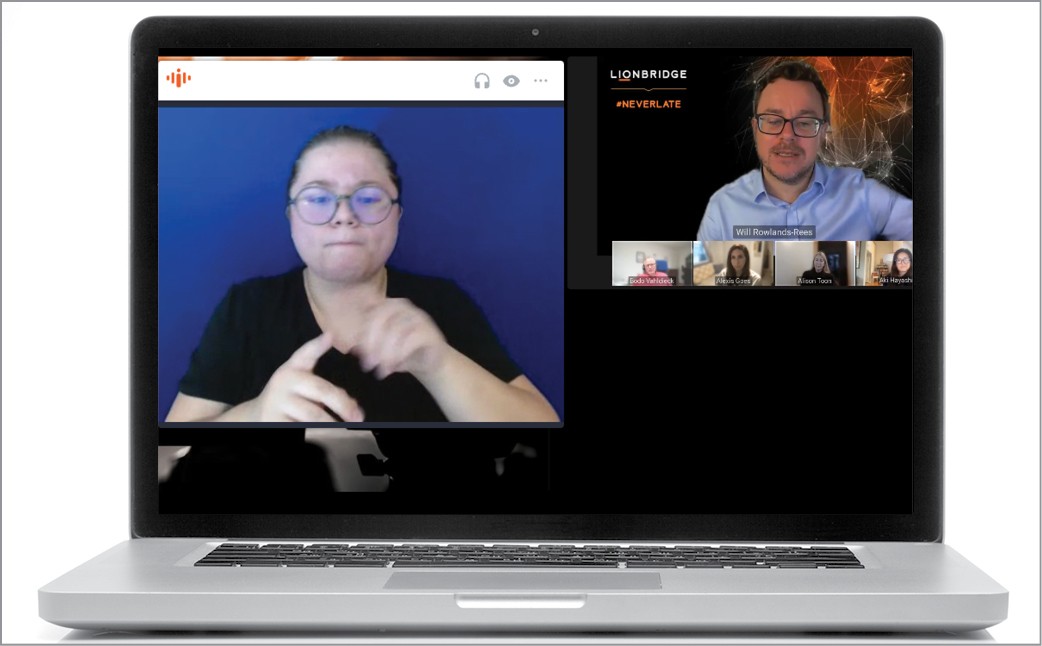

Lionbridge demonstrated how to optimize live events with AI-generated speech translation, multilingual captioning, and sign language powered by Interprefy.

Webinar attendees could obtain:

- Remote Simultaneous Interpretation (RSI) in Spanish, French, and Japanese.
- AI voice translation in Italian, Korean, and German.
- Captioning in all the above languages.

What exactly did these services look like? The screenshots show how non-English-speaking attendees could easily follow along.

The event also accommodated those whose first language is ASL.

Topics and Takeaways

The discussion was wide-ranging, including topics centering on emerging technologies, the balance between perfection and speed, and the security of AI solutions.

Among the key takeaways:

- You can’t think of virtual events and AI as separate things.
- Consider accessibility for live events separately from accessibility for the replays you make available afterward.
- It’s okay to ask people up front if they need an accessibility-related option, but then you must deliver on it.
- Don’t overlook English. Even if your event is in English, you must be mindful of the quality of your English transcriptions and subtitling.
- As platforms emerge, users must be mindful of security and privacy.
- Understand where ownership of information lies and how long the people providing AI or human-based interpretation retain that information after the event.

The Last Word

Each panelist had the opportunity to leave the audience with a piece of advice, provocative thought, or question.

Dell’s Aki Hayashi encouraged listeners to think about event globalization holistically. She urged attendees to provide translated content from the beginning of the customer engagement to the end, including translated emails, agendas, and registrations, so the global audience will know what is available in their language and how to access it.

CSA Research’s Alison Toon cautioned attendees to differentiate between established and start-up businesses when testing tools. She acknowledged that some immature businesses have excellent ideas, but they may have immature business practices that you need to watch for.

VMware’s Bodo Vahldieck anticipates that in a year, an event summary will be standard in all video platforms, adding value to videos. People will be able to determine quickly if recorded content will interest them. Companies with outstanding content will have an opportunity to engage their customers more than they were able to do previously.

Perhaps the session’s most soul-searching question came from Zendesk’s Alexis Goes.

“What will the role of Language Service Providers and localization teams look like if platforms start providing these solutions natively?” Alexis asked.

Alexis pointed to:

- Zoom’s automated captions.
- Spotify’s AI voice translations for podcasts.
- Chrome’s plug-in for automated captioning for videos and meetings you watch through the browser.

While these tools will increasingly enhance accessibility and aid non-English speakers, she pondered what happens when such capabilities are available to and from any language.

“It’s a good thought for people to ponder and really make sure in this changing world, they’re reevaluating where they can bring the most value to their organizations,” Will Rowlands-Rees concluded. “[The panelists] have shared great insights to have helped with that.”

Get in touch

Want to explore generative AI opportunities and start using AI tools for global events and streaming multimedia content? Reach out to us today to find out how.