
What exactly are generative AI (GenAI) and Large Language Model (LLM) technologies? How will GenAI and LLMs, such as ChatGPT, disrupt translation and localization? How can you use LLMs to upgrade global content workflows?

Lionbridge’s Vincent Henderson, leader of the product and development teams, answered these questions and more during the first in a series of webinars on generative AI and Large Language Models.

If you missed our webinar, watch it on demand.

Short on time right now? Read about some of the topics Vincent addressed during the webinar.

What Are Generative AI and Large Language Models?

Generative AI and LLMs are Artificial Intelligence (AI) engines that have learned how humans write by training on a vast corpus of text from the internet. Give one an input, and it will complete the text with the most probable continuation, based on what it learned during training.
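To make the idea of "the most probable next piece of text" concrete, here is a deliberately tiny Python sketch. It counts which word follows which in a toy corpus (a bigram model) and then greedily appends the most frequent follower. Treat it as an illustration under heavy simplifying assumptions: real LLMs use neural networks over subword tokens and weigh the entire context rather than just the previous word, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily append the most frequent next word until no continuation is known."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the toy model has never seen anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(complete("fish", model, max_words=3))  # fish . the cat
```

Scaling this idea from word counts to a neural network trained on internet-scale text, and from one previous word to the whole prompt, is what separates a toy like this from an LLM.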

Built on recent advances in AI, these technologies decide what to output by drawing on everything they learned from a massive amount of data.

Predicting the most plausible next output may seem trivial, but the task is highly sophisticated. When text comes in, the model looks at it holistically and works out how it fits within the broader picture of language before producing its output. Drawing on its training, it determines which parts of the prompt are most important and need attention.

As a result, LLMs have the uncanny ability to produce text that appears to have been written by humans. The technology seems to understand our intent and to think and act as we do.

What Can LLMs Do?

Based on its learning, an LLM, such as ChatGPT, can do several things:

- It answers questions: Give it text as a question, and it will provide the most plausible text that constitutes an answer.

- It follows instructions: Give it a set of instructions, and it will output the likeliest result of applying them.

- It learns from examples: Give it a few examples followed by a new input in the same format, and it will provide the most plausible piece of text, applying the example format to the new input.
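A few-shot prompt is just text: the examples and the new input are laid out in one consistent format, and the model continues the pattern. The sketch below assembles such a prompt. The `Input:`/`Output:` layout, the helper name, and the sample product strings are hypothetical choices for illustration, and sending the prompt to any particular LLM API is deliberately left out.

```python
def build_few_shot_prompt(
    examples: list[tuple[str, str]],
    new_input: str,
    input_label: str = "Input",
    output_label: str = "Output",
) -> str:
    """Lay out example pairs, then the new input with an empty output slot for the model to fill."""
    blocks = [f"{input_label}: {src}\n{output_label}: {tgt}" for src, tgt in examples]
    # The final block ends right after the output label, inviting the model to complete it.
    blocks.append(f"{input_label}: {new_input}\n{output_label}:")
    return "\n\n".join(blocks)


# Hypothetical examples showing the desired format.
examples = [
    ("Blue cotton T-shirt, size M", "Camiseta de algodón azul, talla M"),
    ("Red wool sweater, size L", "Suéter de lana rojo, talla L"),
]
prompt = build_few_shot_prompt(
    examples, "Green linen shirt, size S", input_label="English", output_label="Spanish"
)
print(prompt)
```

Two examples are often enough to convey a simple format; more varied examples help when the pattern is subtler.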

Why Are LLMs So Impactful for Translation?

To understand what makes LLMs like ChatGPT advantageous for translation and localization, let’s first examine some of the challenges associated with automated translation when using Neural Machine Translation (NMT).

To date, companies have relied on Machine Translation (MT) engines: highly specialized language models optimized to take a string of words and determine the translation that corresponds to it. Companies whose generic MT engine produced suboptimal results could improve those results by fine-tuning the engine on tens of thousands of relevant training examples.

The undertaking is costly, and companies using MT engines must continuously determine whether retraining the engine is worth the effort and expense each time they start a new initiative, such as a new product launch or marketing effort.

Conversely, LLMs learn what a company expects from only a few examples because these engines already know so much. They can also apply their learning to new undertakings. As a result, desired translation results can be achieved with much lighter, context-aware prompting and without task-specific model training involving large amounts of data.

However, at the time of this writing, the LLMs with these capabilities are under a lot of demand pressure, and they can't yet cater to the volumes of content involved in industrial-scale localization. This situation will evolve soon, but the timing is uncertain.

Do LLMs or MT Engines Perform Translation Better?

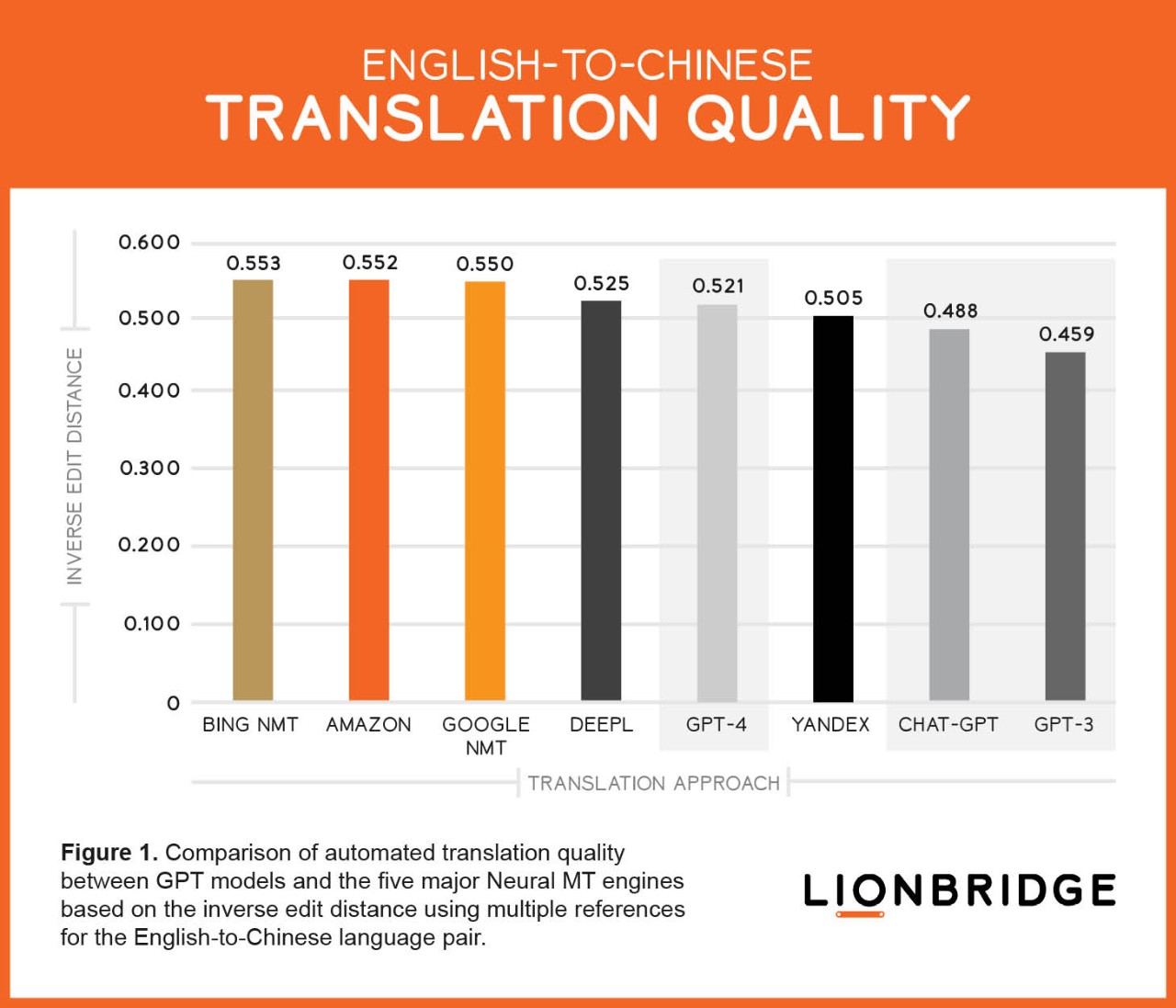

How does the raw translation performance of LLMs compare to that of MT engines? Lionbridge compared the translation quality of the major MT engines and GPT models on a sentence translated from English into Chinese, Spanish, and German.

Generally, GPT does not perform as well as the best current MT engines, but it comes close. In one instance, shown in Figure 1, GPT-4 slightly outperformed the Yandex MT engine for the English-to-Chinese language pair.

To see other comparative results of engines based on various domains and languages, visit the Lionbridge Machine Translation Tracker, the longest-standing measure of the overall performance of automated translation.

How Else Do LLMs Like GPT Compare to the Major MT Engines?

Vocabulary, grammar, and accuracy

Without specific instructions, LLMs like GPT translate more colloquially than the major MT engines. This tendency may be at odds with how professional translators work, and they may consider it a mistake. GPT can also sometimes make up neologisms, or new expressions, which translators avoid.

Getting LLMs to use particular terminology is easy: simply ask them to. In contrast, getting MT engines to incorporate terminology takes more work. It involves either training the MT engine or building a superstructure around it that injects the terminology into the source or the output. This approach typically introduces conjugation or agreement errors.
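Asking an LLM to respect terminology can be as simple as listing the required term pairs in the prompt. The following sketch assumes a hypothetical in-house glossary stored as a Python dictionary and includes only the entries whose source term appears in the text. The matching is a naive case-insensitive substring check (so "invoice" would also match "invoices"), and the prompt wording is an assumption, not a tested recipe.

```python
def build_terminology_prompt(source: str, glossary: dict[str, str], target_language: str) -> str:
    """Build a translation prompt that lists the required target terms."""
    # Keep only glossary entries whose source term appears in the text (naive substring match).
    relevant = {
        term: target for term, target in glossary.items() if term.lower() in source.lower()
    }
    lines = [f"Translate the following text into {target_language}."]
    if relevant:
        lines.append("Use exactly these translations for the listed terms:")
        lines.extend(f"- {term} -> {target}" for term, target in relevant.items())
    lines.append("")
    lines.append(f"Text: {source}")
    return "\n".join(lines)


# Hypothetical glossary for illustration only.
glossary = {
    "workspace": "Arbeitsbereich",
    "invoice": "Rechnung",
    "shipping label": "Versandetikett",
}
prompt = build_terminology_prompt(
    "Open the workspace to view your latest invoice.", glossary, "German"
)
print(prompt)
```

Filtering the glossary keeps the prompt short, which matters when a glossary holds thousands of entries but a given sentence uses only a few.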

Because GPT is so language-aware, it rarely makes language errors such as agreement (concordance) errors, and when it does, it will typically correct itself if asked.

What Else Can Large Language Models Do?

Source or target analysis and improvement

LLMs are versatile. You can use them not only to improve the target text but also to improve the source. Their analysis extends beyond quantitative measures such as frequencies, volumes, and lengths to qualitative evaluation and automatic improvement. Give LLMs specific instructions to produce the result you want.

Use ChatGPT to:

- Simplify complex terminology or rewrite content more simply for improved readability and translatability

- Shorten long sentences without changing the meaning for improved readability and translatability

Using LLMs to make content easier to read makes it more accessible to audiences. Improving the source and reducing word count can decrease your localization costs.
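Before asking an LLM to shorten sentences, a simple quantitative pass can pick out the candidates. The sketch below splits text into sentences with a naive regular expression and flags any sentence longer than a word limit. The 25-word default is an arbitrary assumption, not an industry standard, and the helper names are hypothetical. The flagged sentences would then be sent to the LLM with a rewrite instruction like the ones listed above.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naively split on '.', '!' or '?' followed by whitespace (abbreviations are not handled)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_long_sentences(text: str, max_words: int = 25) -> list[tuple[int, str]]:
    """Return (word_count, sentence) for every sentence longer than max_words."""
    flagged = []
    for sentence in split_sentences(text):
        count = len(sentence.split())
        if count > max_words:
            flagged.append((count, sentence))
    return flagged


text = (
    "Click Save. "
    "If you have made changes to any of the settings on this page and you want to keep them, "
    "click Save before you leave."
)
for count, sentence in flag_long_sentences(text, max_words=10):
    print(count, sentence)
```

A production pipeline would use a proper sentence segmenter, but even this rough filter shows where LLM rewriting effort is best spent.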

Post-editing and language quality assurance

You can ask LLMs to post-edit your translations just as you would ask a professional translator. Do LLMs do a good job of post-editing? One analysis found that LLM post-editing significantly reduced the effort needed to bring a sentence to its final version, measured as edit distance, from 48 percent to 32 percent. LLMs can also find errors such as extra spaces, suggest better word choices, and improve the target text with a rewrite.
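Edit distance counts the minimum number of insertions, deletions, and substitutions needed to turn one text into another. The analysis above does not say exactly how its percentages were computed, so the sketch below assumes a word-level Levenshtein distance normalized by the length of the longer text. Character-level variants and metrics such as TER are also common, and the function names are hypothetical.

```python
def word_edit_distance(a: str, b: str) -> int:
    """Word-level Levenshtein distance, computed with a rolling dynamic-programming row."""
    x, y = a.split(), b.split()
    prev = list(range(len(y) + 1))  # distance from an empty prefix of x to each prefix of y
    for i, xw in enumerate(x, start=1):
        curr = [i]
        for j, yw in enumerate(y, start=1):
            cost = 0 if xw == yw else 1
            curr.append(min(prev[j] + 1,          # delete xw
                            curr[j - 1] + 1,      # insert yw
                            prev[j - 1] + cost))  # substitute or keep
        prev = curr
    return prev[-1]


def edit_distance_percent(mt_output: str, final: str) -> float:
    """Edit distance as a percentage of the longer text's word count (an assumed normalization)."""
    longest = max(len(mt_output.split()), len(final.split()), 1)
    return 100 * word_edit_distance(mt_output, final) / longest


raw = "Please to click the button for save"
post_edited = "Please click the button to save"
print(word_edit_distance(raw, post_edited), round(edit_distance_percent(raw, post_edited), 1))
```

A lower percentage means the post-editor had to change less of the machine output, which is why a drop from 48 to 32 percent translates into less human effort.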

How Can Language Service Providers Like Lionbridge Support Upgrades To Content Workflows Resulting From Generative AI?

Generative AI will drastically change workflows for multilingual content. Lionbridge can provide services associated with these changes.

Multilingual content generation services

The ability of LLMs to generate multilingual content will arguably be the most significant disruption to the localization world since the introduction of Translation Memories (TMs).

Here’s how LLMs can generate multilingual content from scratch: give them source information, then ask them to produce derivative content directly in multiple languages.

LLMs will enable companies to generate product descriptions, tweets, and other material from data they already have. Grounding the output in that data avoids the hallucinations LLMs tend to produce when asked about real-world facts.
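Grounding generation in data you already have can be as simple as placing the facts in the prompt and instructing the model not to go beyond them. The sketch below builds one prompt per target language from a hypothetical `Product` record. The field names, prompt wording, and language list are illustrative assumptions, and the actual LLM call is left out; each generated text would still go to a post-editor in that language.

```python
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical structured product data a company already holds."""
    name: str
    material: str
    color: str
    price_eur: float


def build_generation_prompts(product: Product, languages: list[str]) -> dict[str, str]:
    """Return one grounded generation prompt per target language."""
    facts = (
        f"Name: {product.name}\n"
        f"Material: {product.material}\n"
        f"Color: {product.color}\n"
        f"Price: {product.price_eur:.2f} EUR"
    )
    return {
        lang: (
            f"Write a two-sentence product description in {lang}.\n"
            "Use only the facts below; do not add claims that are not listed.\n\n"
            f"{facts}"
        )
        for lang in languages
    }


prompts = build_generation_prompts(
    Product("Trail Jacket", "recycled nylon", "olive", 129.0),
    ["French", "German", "Japanese"],
)
print(prompts["German"])
```

Keeping the facts in one shared block means every language version is generated from identical source data rather than from a translation of a translation.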

Historically, global content has been produced through two separate workflows: one for domestic content and one for global content. With LLMs, you no longer produce derivative content by first employing writers in your source language and then starting your localization workflow. Instead, you generate the derivative content in every language you need and then have post-editors review the text in each language, including your domestic language.

Because of our extensive crowd of translators, Lionbridge is well-suited to provide post-editing services for multilingually generated content.

Support for customers’ multilingual generative AI initiatives

Using LLMs to generate multilingual content requires prompt engineering, which is time-consuming and often involves trial and error. Helping companies build suitable prompts is an emerging category of localization services and an area where Lionbridge can assist.

Lionbridge can simplify AI use with backend development and help customers curate the type of content they use as examples and prompts for multilingual generative AI initiatives they run for themselves.

Optimization of multilingual assets

GPT can also modify linguistic assets, such as TMs and style rules.

Lionbridge used GPT-4 to make an entire French TM more informal, adapting its tone and style to the customer’s specifications more affordably than was previously possible.


Improvements to localization workflows

This category covers using LLMs to make post-editing easier, faster, and cheaper and, ultimately, to make the whole translation workflow more effective and cost-effective.

Improving localization workflows makes localizing everything a more realistic goal for companies.

The Bottom Line: What’s the Outlook for the Future?

LLMs will disrupt localization. Over time, workflows will flatten.

Instead of operating under separate domestic and global workflows, companies will be able to define content goals, plan content, and generate content immediately in multiple languages.

While new technologies often provoke fear of job elimination, Lionbridge is not worried about the possibility of LLMs upending Language Service Providers (LSPs) or the need for translators.

Companies using LLMs to generate content multilingually will still require domain experts to review the machine output. It is essentially post-editing, just as with Machine Translation, though we might call it something else in the future.

“The whole language industry must respond to the challenge LLMs present. It’s exciting. We’re at the dawn of an explosive multiplication of use cases that LLM technology can address.”

— Vincent Henderson, Lionbridge Head of Product, Language Services

To learn more about generative AI and see it in action via demos, watch the webinar now.

Get in touch

If you’d like to explore how Lionbridge can help you create efficiencies for your global content needs using the latest technology, contact us today.